This blog post is part of the ‘From CERN to Cledar’ series by Cledar founders Hubert Niewiadomski and Piotr Nyczyk, about how their combined 15 years of experience at CERN – one of the world’s largest research institutes – shaped the vision and values that make Cledar unique.

When I learned that, despite everybody’s best efforts, some of the measurements from the Large Hadron Collider (LHC for short) were still months away from being genuinely useful to the science community, I realized that I had to act, and to think differently, to find a solution. This is the story of how my colleagues at the time and I anticipated physics that had never been measured before, in order to accelerate scientific progress at CERN.

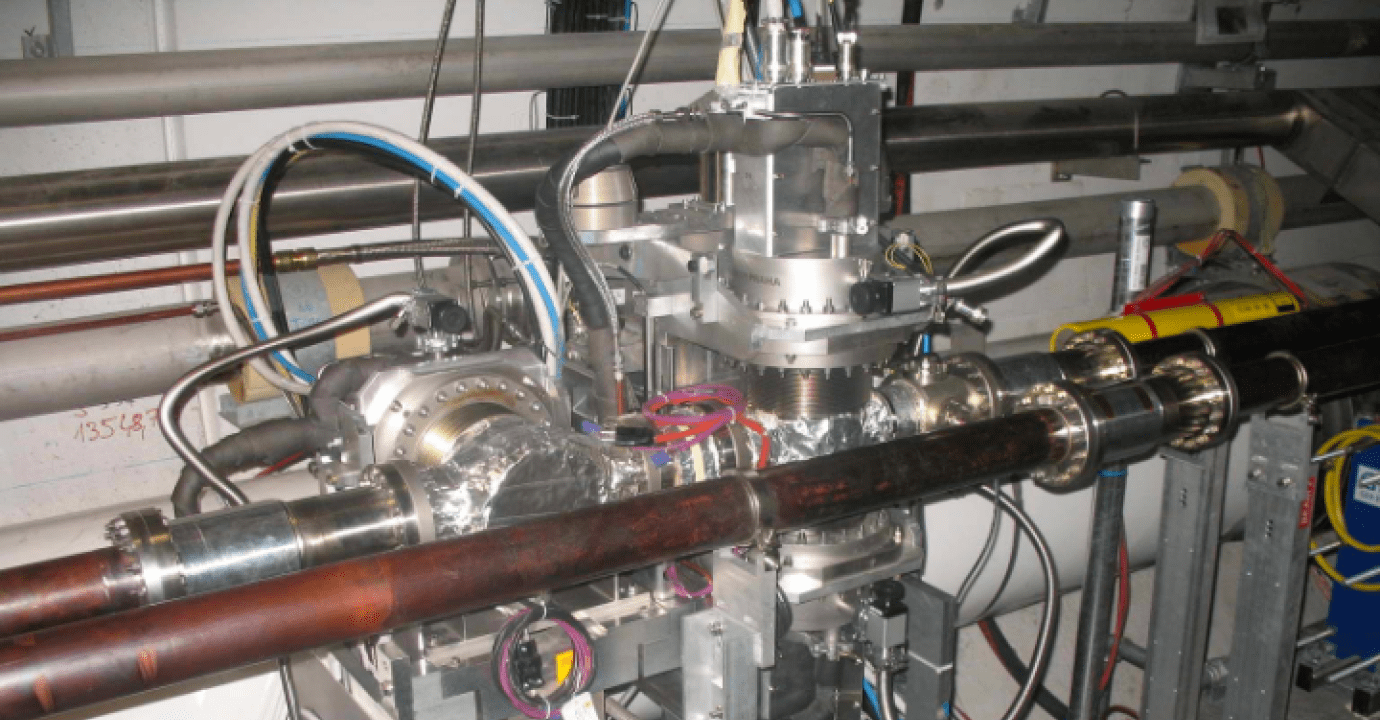

The LHC is at the heart of CERN. Its purpose is to accelerate two beams of particles, travelling in opposite directions, to almost the speed of light. The beams are then made to collide, and detectors capture data about these collisions so that scientists can better understand the forces that drive and shape the universe.

When I explain this to non-scientific colleagues, such experiments can seem abstract and detached from our daily lives. And yet the findings they generate are highly relevant to how the world works today, and to the scientific and medical advances that we take for granted now and will rely on in the future (in ways we don’t yet know). Tumor detection, X-rays, CT scans, nuclear … we rely on the science behind these technologies every day, and it will continue to contribute to the health of mankind and, potentially, the planet. Yep, it’s pretty important stuff.